Bayesian paradigm in social intervention research on evidence building

(Din) Ding-Geng Chen

University of North Carolina at Chapel Hill, USA

Int J Ment Health Psychiatry

Abstract

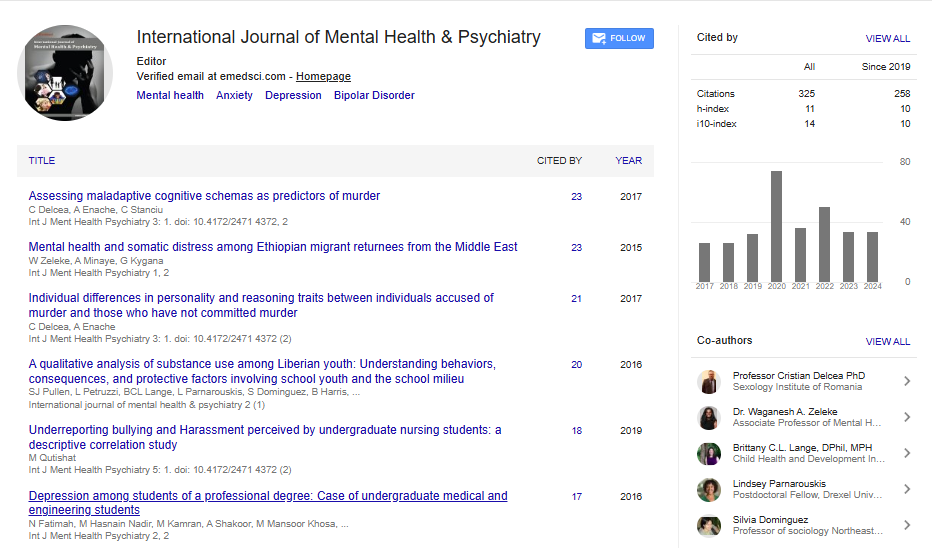

Statement of the Problem: Social interventions are purposefully implemented change strategies. They are intended to follow a design and evaluation process in which activities build on prior information over time. The process is iterative and nonlinear, refining existing evidence and building new evidence. Although prior information underpins each successive round of evidence, it is rarely used in the data analysis of intervention studies. This is inconsistent with the scientific principle of evidence building, and a new paradigm should be explored.

Methodology & Theoretical Orientation: We describe a Bayesian perspective on intervention research. Bayesian methods make use of prior information in analyses. In particular, rather than ignoring prior information as in typical intervention analysis, the Bayesian approach combines prior information with the distribution of newly collected data through Bayes’ theorem. Information from prior studies is used to formulate a prior distribution, which is updated with the current data to give a posterior distribution. This posterior distribution is then used in the inferential process. A Bayesian approach to intervention research therefore analyzes current study data by drawing on information from previous studies. The Bayesian perspective provides a sequential quantitative method for estimating outcomes in newly obtained data by making use of the previous understanding of intervention effects.

Results: With simulation studies and real intervention data analysis, we found that Bayesian models can improve the accuracy of intervention effect-size estimation, yielding smaller standard errors (Figure 1). In the simulation studies, the classical frequentist t test yielded 80% power, as designed, whereas the Bayesian approach yielded 92% statistical power.

Conclusion & Significance: From a research design perspective, Bayesian methods have the potential to improve power and reduce required sample sizes in intervention research.
If smaller samples could be used, the cost of intervention studies might be reduced, which, in turn, could reduce the design demands of intervention research.

Recent Publications

1. Chen, D.G., Fraser and Cuddeback (2018). Assurance in Intervention Research: A Bayesian Perspective on Statistical Power. JSSWR. 9(1):158-173.
2. Chen, D.G. and Fraser, M.W. (2017). A Bayesian Perspective on Intervention Research: Using Prior Information in the Development of Social and Health Programs. JSSWR. 8(3):441-456.
3. Chen, D.G. and Fraser (2017). A Bayesian Approach to Sample Size Estimation and the Decision to Continue Program Development in Intervention Research. JSSWR. 8(3):457-470.
4. Chen, D.G. and Chen, J.D. (2017). Monte-Carlo Simulation-based Statistical Modelling. Springer.
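As an illustration of the updating step described under Methodology, the following minimal sketch combines a normal prior for an intervention effect size with a normal estimate from a new study. It is not the authors' model: the normal-normal conjugate form, the function name `normal_posterior`, and all numerical values are assumptions chosen only to show why drawing on prior information can shrink the standard error.

```python
import math


def normal_posterior(prior_mean, prior_sd, data_mean, data_se):
    """Combine a normal prior with a normal estimate from new data.

    Assumes a normal-normal conjugate model with known standard errors;
    this is an illustrative simplification, not the abstract's analysis.
    """
    prior_precision = 1.0 / prior_sd ** 2   # information carried by prior studies
    data_precision = 1.0 / data_se ** 2     # information carried by the new study
    post_precision = prior_precision + data_precision
    # The posterior mean is the precision-weighted average of prior and data.
    post_mean = (prior_precision * prior_mean
                 + data_precision * data_mean) / post_precision
    post_sd = math.sqrt(1.0 / post_precision)
    return post_mean, post_sd


# Hypothetical values: effect size 0.30 (SD 0.20) from earlier studies,
# effect size 0.50 (SE 0.20) estimated from the current study alone.
prior_mean, prior_sd = 0.30, 0.20
data_mean, data_se = 0.50, 0.20

post_mean, post_sd = normal_posterior(prior_mean, prior_sd, data_mean, data_se)
print(f"posterior mean = {post_mean:.3f}, posterior sd = {post_sd:.3f}")
```

Under these assumed inputs the posterior standard deviation is smaller than the standard error of the current study alone, which is the mechanism behind the smaller standard errors reported in the Results.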

Biography

(Din) Ding-Geng Chen is an American Statistical Association (ASA) Fellow. He is now the Wallace H Kuralt Distinguished Professor at the University of North Carolina at Chapel Hill. He was a Professor of Biostatistics at the University of Rochester and the Karl E Peace endowed Eminent Scholar Chair in Biostatistics at Georgia Southern University. He has more than 150 refereed professional publications and has co-authored or co-edited 23 books on Randomized Clinical Trials, Statistical Meta-Analysis, Public Health Statistical Methods, Causal Inferences, Statistical Monte-Carlo Simulation and Public Health Applications. dinchen@email.unc.edu
